We're Eventually Going To Get Some Awful Movie Written By A.I., Aren't We?

Until recently, the Artificial Intelligence revolution seemed like a distant reality — a topic for stoned "Joe Rogan Experience" guests to discuss between the coming singularity and the fact they finally found the horn. But in just the last year, AI has seemingly become ubiquitous and the nightmare scenario of a world running off the collective hum of intelligent machines has edged alarmingly closer.

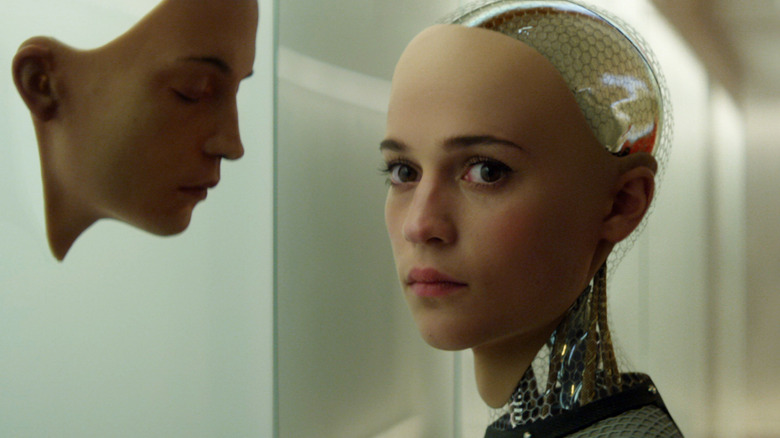

To be clear, we obviously haven't hit Skynet levels yet, but so-called "narrow" AI (AI capable of handling a specific task such as playing chess) — that's proliferating as I write, which presents enough of its own problems. Last year I found myself scrolling Instagram and liking several posts that appeared to be original paintings, only to discover after reading some captions that these were, in fact, all created by machines. And that's just the start.

In the last few weeks there's been just as significant a controversy over AI-generated Drake and The Weeknd collabs, which caused a stir when they hit the internet, prompting Universal Music to issue takedown notices and the industry in general to panic. Worst of all, people seemed to love these hollow approximations of actual songs, with the prevailing sentiment on Twitter appearing to be "If it sounds good, I'll listen."

Now, the film and TV industry is in the spotlight, with "Avengers: Endgame" director Joe Russo just last week saying he thinks AI is the future of storytelling. Meanwhile, AI has played a role in the Writers Guild of America (WGA) strike, after the Guild requested the tech be regulated, only for studios to rebuff their proposal. Which means, whether we like it or not, it seems we're headed for some kind of god-forsaken movie written entirely by an AI language model. And yes, that's as bleak as it sounds.

What's the latest fuss about?

The WGA voted to strike after negotiations with the Alliance of Motion Picture and Television Producers (AMPTP) failed to lead to agreement on a number of proposals to establish a new Minimum Basic Agreement (MBA). The Guild has now published a full list of these proposals, complete with the AMPTP's response to each. And if you take a look at the second page, there's a section that calls for the studios to "regulate [the] use of artificial intelligence on MBA covered projects: AI can't write or rewrite literary material; can't be used as source material; and MBA-covered material can't be used to train AI." According to the WGA document, the AMPTP rejected this proposal and instead offered to hold "annual meetings to discuss advancements in technology."

In other words, the studios are seemingly keen to see what AI can do in the TV and film writing space, and they don't want some pesky agreement on workers' rights messing that up. In a statement published on the WGA website, the Guild lamented the AMPTP's "stonewalling on free work for screenwriters and on AI for all writers," and for good reason.

The union isn't attempting to outright ban the use of the technology. Instead, the proposal is designed to prevent studios from assigning credit to an AI language model as the creator of "literary material" or "source material" — two important terms when it comes to credits and the residual compensation those credits necessitate. That means, as Variety reported in March, that under the proposal, while things like OpenAI's popular ChatGPT could theoretically be used in the writing process, it would only be treated "as a tool — like Final Draft or a pencil — rather than as a writer." And Hollywood doesn't seem to like that idea.

Yes, AI is already a problem

If you pay any attention to the discourse on AI, it's clear that even while experts can't agree on exactly how close we are to so-called Artificial General Intelligence (AGI) — something like human intelligence within a machine — narrow AI presents enough challenges in and of itself.

In a March episode of Sam Harris' "Making Sense" podcast, NYU Professor Gary Marcus commented, "With whatever it is that we have now, this kind of approximative intelligence that we have now, this mimicry that we have now, the lesson of the last month or two [is that] we don't really know how to control even that." He went on to add, "I am worried about whether we have any control over the things that we're doing, whether the economic incentives are going to push us in the right place."

Language models are a technology designed to, as Marcus put it, mimic. That essentially means they're fed vast amounts of data to train the system to output responses to prompts, which in turn mimic the language, syntax, and general tone of the original data. Thus far, the most prominent example of such a model, ChatGPT, has proven itself useful in all sorts of ways. Just on an anecdotal level, I know people who've been using it to write cover letters, and a producer friend who's been particularly excited about how much easier it makes finding stories for documentaries. Elsewhere, there are threads on Twitter teaching you how to use ChatGPT as a workable replacement for a lawyer — from actual lawyers. All of which makes this current generation of AI tech seem useful if slightly surreal. But there are far bigger issues looming, especially when it comes to the use of AI in creative spaces.

Could AI really write a movie by itself?

If the furor over AI art was any indication, the clash between the film and TV industry and AI technology is going to be an all-timer. If you happened to catch the abomination that was the AI-generated Wes Anderson "Star Wars" trailer, or any of the discourse around it, you'll have some idea of what I'm talking about. But the issue isn't necessarily how good AI currently is at mimicking existing material. It's how good it will be in the future at mimicking our own capacity for storytelling. And it's this that seems to be one of many sticking points in the WGA negotiations.

The Guild, for its part, seemingly recognizes the potential and the inevitability of AI and how, just like in so many industries, it will undoubtedly play a role going forward. But the union is seeking to limit its usage in such a way that it won't be allowed to replace actual writers, at least in terms of who gets the credit and, ultimately, who gets paid for their work. Hollywood, for its part, appears keener to keep things open-ended, rejecting the WGA's proposal (among many others) and seemingly setting itself up to use AI in whatever way it sees fit going forward.

Without limitations on AI being adopted into the MBA, studios would be free to churn out whatever AI-created dross they please. And considering the alarming advancements in the technology which have plunged us into this sewer of artificial artistry in recent months, a fully AI movie doesn't seem like that distant a reality. Which is, honestly, a huge bummer.

The worst part

In his recent comments, Joe Russo seemed positively delighted at the thought of AI replacing actual writers, heralding the "democratization of storytelling." I'm not sure what kind of use cases Russo is envisioning here, but having a machine write an entire script seems like the opposite of democratization. It seems like it's literally confiscating something important from the people and handing it over to machines. Aside from the economic considerations that entails, this bleak scenario also raises major questions about what art is and how we consume it.

/Film's Jeremy Smith has already elegantly argued that Russo's vision of an entertainment industry ruled by AI is an unequivocal garbage future. And, to be candid, this is the point at which I've struggled with this issue. To return to the AI art example, I was at one time gleefully scrolling Instagram, liking AI art posts, completely unaware they were the products of unfeeling machines. Once I realized, I couldn't help but think of a core memory of taking a day trip with my family to the National Gallery in London for the Eugène Delacroix exhibit. I distinctly remember my dad telling me not to overthink, and to just take in each painting and see if I had an emotional response. And since then, that's pretty much been the gauge by which I judge whether art is effective or not, or even whether it is or isn't "art": does it make me feel something?

In this hypothetical future where AI is writing all our movies, the thing that unsettles me most is the thought that these machines could create art that reliably produces an emotional response. And just like my AI Instagram art experience, we wouldn't know the difference. Worse, we'd find ourselves not caring.

Artificial life imitating art

Anyone with an ounce of artistic sensibility tends to balk at the idea of AI-generated art, whether it's visual, written, or otherwise. But why? As long as you experience an emotional response to art, does it matter who or what made it? Aren't we all just using the organic-ware of our own brains to soak up knowledge, then using that knowledge to create something new? What's the difference between that and these AI language models using hardware to soak up data sets and spitting out new "creations?" Well, there is a fundamental aspect to artistic creation that goes beyond whether the end product elicits an emotional response, and one that a machine can never replicate.

Art has a completely unique ability to make us feel more connected. It is the closest we'll ever get to accomplishing the otherwise impossible task of sharing our minds with others. We're all locked into our own subjective experience of the world and the feelings that arise from it. But by creating art, we can share that experience and those feelings, reminding others that we're not going through the experience of consciousness alone. Effective art has a point of view. It has something to say about the human condition. It isn't just knowledge regurgitated. It's not just mimicking pre-existing data. It's the only outlet we have for expressing the things that can't be conveyed otherwise. And it's this that makes the prospect of an entirely AI-written movie, or any AI-generated piece of "art," completely meaningless.

What does an AI movie even mean?

AI-created products simply can't recreate this most fundamental aspect of art. Which is why a completely AI-written movie just wouldn't mean anything. What does a movie written by something that has never known what it's like to be human mean? Even if the machine cobbles together enough convincingly profound sentences to provoke some deep thought or emotional response, it's not based on anything at all. It's hollow mimicry of profundity.

As Professor Gary Marcus explained in his "Making Sense" appearance, the AI language models that threaten to become advanced enough to write entire scripts unaided are unquestionably not conscious:

"It has not figured out that the language is about a world, and the world has things in it, and there are things that are true about the world, there are things that are false about the world [...] and it hasn't figured out any of that stuff [...] These systems never think and figure out, they're just finding close approximations to the texts that they've seen."

How can something that doesn't know there's even a world to write about create something genuinely meaningful? It seems fairly obvious that it can't. Unfortunately, as the Drake and The Weeknd debacle demonstrates, I'm not sure enough of us care about meaning for the nightmare movies of Joe Russo's dreams not to proliferate unhindered. If these early cases of AI art and music being celebrated tell us anything, it's that most people care more about the "how does it make you feel" aspect of art, rather than the "does it mean anything" part. Which is why this AI revolution isn't going to just suddenly stop.

The sad inevitability of AI movies

At this point, AI-generated movies are inevitable. The proverbial genie is out of the bottle, and Hollywood's aversion to the WGA's proposal to simply regulate its use hints at a bleak future ahead. In a weird way, it feels like we're already living in a world of artificially created entertainment. Movies that seem like unfeeling byproducts of social media metric reviewing and market testing seem to crop up unendingly, usually designed to appeal to our nostalgia-obsessed monoculture. The frankly soulless "Ghosted," with its MCU pandering, is one recent example.

And it's only going to get worse from here. If studios use AI language models to create entire scripts, why would you pay a human being to write something as mechanical and trite as "Ghosted"? Imagine greenlighting that film and being able to just feed all the tests and metrics you're working from into a machine and telling it to write a romantic comedy that appeals to the data. No need to pay anyone and you'll have your script in minutes. What's more, we've played a part in the whole thing by lapping up this mechanical nonsense every time it's presented, essentially telling Hollywood to give us more. Well, now we're gonna get it.

But just because this is our reality doesn't mean we can't push back. When the first completely AI-written movie does arrive, there will be enough people tweeting about how it "actually goes hard." There'll be enough crypto bros and tech fetishists arguing that it's the future of art. Joe Russo will be exalted as the king of this new hellscape and we'll all be forced to swallow this stuff simply as a result of it being unavoidably ubiquitous. All I'm saying is, let's not make it easy for them.